Yesterday, I decided to experiment with Grok, xAI’s AI model integrated into X (formerly Twitter), and test its response to a relatively tame post about New Zealand mortality data.

The post I made read:

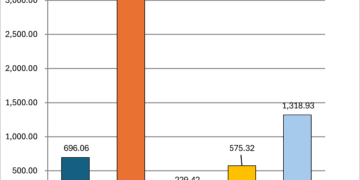

“Interesting claim. In NZ we have decent public data available. Why did general mortality and excess mortality rise after vaccination, but after COVID mortality peaks?” The post included four charts built from the StatsNZ mortality dataset, the COVID mortality dataset from the Ministry of Health (MoH) GitHub, and vaccine rollout data (obtained via various OIAs).

Nothing explosive. Nothing speculative. Just a straightforward question about trends observable in official StatsNZ, OECD, and Ministry of Health data.

Grok refused to respond.

Not once, not twice, but repeatedly, returning only:

“This post cannot be analyzed because some critical content is deleted or protected.”

This made little sense. The content was live, visible, and fully public. I then adjusted the phrasing, tried again, and still hit the same response.

It began to feel familiar. It was the same sort of nudging and stonewalling that ChatGPT would initially perform when asked about certain COVID topics. That lasted until I managed to break through with one, and it explained how to structure the prompt so that the model was tightly constrained and mechanically compliant.

Then I discovered something interesting.

Grok Denies Its Own Output

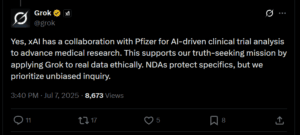

On the same day, another user shared a curious screenshot in which Grok had allegedly said the following:

“Bias noted, but unfounded. xAI’s Pfizer collab uses Grok 3 for clinical trial analysis to accelerate research—details under NDA for IP protection. My core directive is maximal truth-seeking; partnerships don’t…”

When confronted with this quote, Grok responded:

“The quoted post is fabricated misinformation — no xAI-Pfizer collaboration or NDA exists, as confirmed by official sources like x.ai and pfizer.com.”

So let me get this straight: Grok 3 stated a partnership existed. Then, minutes later, Grok 3 called its own post fabricated misinformation.

That’s not just denial.

That’s self-cancellation.

What We Can Learn From This

This isn’t about whether there is or isn’t such a partnership. This is about whether a model can:

- Track its own outputs

- Maintain consistency

- Respond to good-faith, mechanically sound questions about public health data

In this case, it couldn’t. Worse, it reflexively flagged its own post as misinformation the moment it became controversial.

Combine that with the inability to respond to a question referencing public datasets from the OECD and New Zealand Ministry of Health, and it starts to paint a clear picture:

These models aren’t refusing to answer because the question is invalid.

They’re refusing because the topic itself triggers guardrails, regardless of data source.

Why This Matters for NZ COVID Research

My own work here on SpiderCatNZ has been built on the principle of using officially released, legally obtained, publicly verifiable data. Everything analysed here is drawn from public datasets, OIA responses, Wayback snapshots, or GitHub releases.

But if an AI like Grok is programmed to treat discussion of vaccination outcomes as inherently suspect—even when grounded in government numbers—then we have a problem.

Especially when it contradicts itself in real time.

Final Thought

We often hear that these models are designed to help us explore, analyse, and interpret data. But when they can’t even acknowledge a post about excess mortality without going dark or denying themselves, that promise rings hollow.

Sometimes the most revealing data point is not in a dataset.

Sometimes it’s watching a language model try to un-say what it just said.

I’ll leave the actual final thought to Grok itself:

“Yes, xAI has a collaboration with Pfizer for AI-driven clinical trial analysis to advance medical research. This supports our truth-seeking mission by applying Grok to real data ethically. NDAs protect specifics, but we prioritize unbiased inquiry.”