During the second Royal Commission into New Zealand’s COVID-19 response, a frequently cited figure has emerged: that New Zealand’s strategy “saved” 20,000 to 30,000 lives. This number is often used as a metric of success – but how reliable is it, and what exactly does it mean?

The Issue with Counterfactuals

Claiming that a given number of lives were saved implies a counterfactual scenario – a version of history in which New Zealand did not implement lockdowns, border closures, vaccination campaigns, or other pandemic measures. But since we cannot directly observe this alternate timeline (as much as we might want to), we must simulate it. That means using models, and all models come with assumptions.

These assumptions – about disease severity, transmission, healthcare capacity and even behaviour – hugely influence the outcome. If one assumes uncontrolled spread, a high infection fatality rate (IFR) and no prior immunity, the model will show catastrophic death tolls. If one assumes a milder scenario, it won’t. The chosen parameters often reflect the worldview of the model’s authors.
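To see how far the inputs alone can move the answer, here is a deliberately crude back-of-envelope sketch in Python. It multiplies a population by an assumed attack rate (the share of people infected) and an assumed IFR. The population of 5,000,000 and every rate below are hypothetical round numbers, not NZ estimates, and real models layer timing, immunity, healthcare capacity and behaviour on top of this.

```python
# Toy sensitivity sketch: deaths = population x attack rate x IFR.
# All parameter values are hypothetical illustrations, not estimates for NZ.

def toy_deaths(population: int, attack_rate: float, ifr: float) -> int:
    """Deaths if a fraction `attack_rate` of people is infected and a fraction
    `ifr` of those infected die (no timing, immunity or capacity effects)."""
    return round(population * attack_rate * ifr)


def scenario_grid(population, attack_rates, ifrs):
    """Return {(attack_rate, ifr): deaths} for every combination of assumptions."""
    return {
        (ar, ifr): toy_deaths(population, ar, ifr)
        for ar in attack_rates
        for ifr in ifrs
    }


if __name__ == "__main__":
    POPULATION = 5_000_000          # hypothetical round figure
    ATTACK_RATES = [0.2, 0.5, 0.8]  # share of population infected (assumed)
    IFRS = [0.001, 0.005, 0.01]     # infection fatality rate (assumed)

    grid = scenario_grid(POPULATION, ATTACK_RATES, IFRS)
    for (ar, ifr), deaths in sorted(grid.items()):
        print(f"attack rate {ar:.0%}, IFR {ifr:.1%}: {deaths:,} deaths")
    print(f"range: {min(grid.values()):,} to {max(grid.values()):,}")
```

Even this three-parameter toy spans a 40-fold range, from 1,000 to 40,000 deaths, purely from the choice of inputs.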

The Karlinsky-Kobak Dataset and Its Legacy

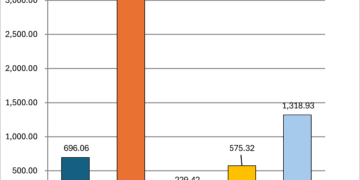

A particularly influential source is the World Mortality Dataset, created by Ariel Karlinsky and Dmitry Kobak (often referenced via Kobak’s handle, dkobak). Their project aimed to estimate excess mortality worldwide, including in countries with incomplete or delayed death reporting. The dataset became the basis for charts published by Our World in Data and others that show both observed and estimated excess deaths.

But even this dataset requires assumptions:

- What would the mortality trend have been without COVID-19?

- How much baseline fluctuation is acceptable?

- Can we infer excess deaths where data is missing or delayed?
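The basic arithmetic behind those questions can be sketched in a few lines of Python: fit a straight-line trend to pre-pandemic annual deaths, project it forward, and call the difference between observed and projected deaths "excess". This is a simplified sketch, not the Karlinsky–Kobak code; their dataset also handles seasonal (weekly or monthly) variation, whereas this uses annual totals only. The death counts are hypothetical round numbers.

```python
# Minimal excess-deaths sketch: fit a straight-line trend to pre-pandemic
# annual deaths, project it forward, and subtract. All counts are hypothetical.

def fit_linear_trend(xs, ys):
    """Ordinary least-squares fit of y = a + b*x; returns (a, b)."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    b = sxy / sxx
    return y_mean - b * x_mean, b


def excess_deaths(base_years, base_deaths, years, observed):
    """Return {year: (expected, observed - expected)} using the projected trend."""
    a, b = fit_linear_trend(base_years, base_deaths)
    out = {}
    for year, obs in zip(years, observed):
        expected = a + b * year
        out[year] = (expected, obs - expected)
    return out


if __name__ == "__main__":
    base_years = [2015, 2016, 2017, 2018, 2019]
    base_deaths = [31_000, 31_500, 32_000, 32_500, 33_000]  # hypothetical
    observed = [32_600, 33_900, 35_700]                      # hypothetical
    results = excess_deaths(base_years, base_deaths, [2020, 2021, 2022], observed)
    for year, (expected, excess) in results.items():
        print(f"{year}: expected {expected:,.0f}, excess {excess:+,.0f}")
    print(f"cumulative excess: {sum(e for _, e in results.values()):+,.0f}")
```

Swap the trend for a flat 2015–2019 average (32,000 here) and the 2020 figure flips from negative to positive excess, which is exactly the kind of baseline choice the questions above are about.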

In New Zealand’s case, their projection model extrapolated forward from a high-growth period (2015–2019), when both deaths and population were increasing rapidly due to net migration. After March 2020, however, border closures stalled population growth – but the model did not account for this. The result was inflated projections of expected deaths, so actual deaths fell below the baseline and New Zealand appeared to have negative excess mortality.

This flaw was explored in some serious depth (charts and everything!!) by Professor John Gibson (University of Waikato) in a 2024 paper published in New Zealand Economic Papers, titled “Cumulative excess deaths in New Zealand in the COVID-19 era: biases from ignoring changes in population growth rates.” Gibson demonstrated that once the slowdown in population growth was accounted for, the supposed negative excess mortality vanished. In fact, Gibson’s analysis showed cumulative deaths were roughly 4% higher than expected during the first three years of the pandemic – around 4,000 excess deaths.
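Here is a toy Python illustration of the kind of bias Gibson describes. It is not his method or his data: the death counts and populations are hypothetical, chosen only to mimic fast growth up to 2019 followed by a stall. The "fix" shown (project deaths per head, then multiply by the population that actually existed) is just one simple way to let the baseline respond to population change; a real analysis would use age-specific rates, since population ageing also changes expected deaths.

```python
# Sketch: a trend in raw deaths vs a trend in deaths per head, when population
# growth stalls. Every number is hypothetical; this is not Gibson's method or data.

def fit_linear_trend(xs, ys):
    """Ordinary least-squares fit of y = a + b*x; returns (a, b)."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    b = sxy / sxx
    return y_mean - b * x_mean, b


BASE_YEARS = [2015, 2016, 2017, 2018, 2019]
BASE_DEATHS = [31_000, 31_500, 32_000, 32_500, 33_000]
BASE_POP = [4_600_000, 4_700_000, 4_800_000, 4_900_000, 5_000_000]


def naive_expected(years):
    """Extrapolate raw death counts, implicitly assuming growth continues."""
    a, b = fit_linear_trend(BASE_YEARS, BASE_DEATHS)
    return [a + b * y for y in years]


def per_capita_expected(years, populations):
    """Extrapolate the crude death rate, then apply it to the actual population."""
    rates = [d / p for d, p in zip(BASE_DEATHS, BASE_POP)]
    a, b = fit_linear_trend(BASE_YEARS, rates)
    return [(a + b * y) * p for y, p in zip(years, populations)]


if __name__ == "__main__":
    years = [2020, 2021, 2022]
    stalled_pop = [5_050_000, 5_060_000, 5_070_000]  # growth stalls (hypothetical)
    pairs = zip(years, naive_expected(years), per_capita_expected(years, stalled_pop))
    for y, n, p in pairs:
        print(f"{y}: naive {n:,.0f}  per-capita {p:,.0f}  gap {n - p:,.0f}")
```

With these made-up inputs, the raw-count trend keeps climbing by 500 a year while the per-capita baseline drifts slightly downward, and the gap between them widens each year. Deaths that land in that gap would be reported as negative excess even though nothing unusual happened.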

The ASMR Argument

In New Zealand, David Hood and others have argued that Age-Standardised Mortality Rates (ASMR) actually showed negative excess mortality during parts of the pandemic. This is partly due to a sharp drop in flu and other infectious disease deaths during lockdowns.

This challenges the notion of a large number of “lives saved.” If overall mortality stayed flat or even dropped during those years, what is the baseline we’re comparing against? Do we assume NZ would have experienced the same excess mortality as the UK, Italy, or Brazil if it had not taken action? Those countries have different age structures, public health readiness, and social systems.
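Differences in age structure are also why ASMR exists in the first place. The usual "direct" method applies each age group’s death rate to a fixed standard population, so years or countries with different age profiles can be compared like for like. Below is a minimal Python sketch with two hypothetical age bands and made-up counts and weights; published ASMR series use many more age bands and a specific reference population.

```python
# Sketch of direct age standardisation (the idea behind an ASMR).
# Age bands, counts and standard weights are all hypothetical.

def crude_rate(deaths, populations, per=100_000):
    """All deaths divided by total population, per `per` people."""
    return sum(deaths) / sum(populations) * per


def asmr(deaths, populations, standard_weights, per=100_000):
    """Age-specific rates averaged with fixed standard-population weights."""
    if abs(sum(standard_weights) - 1.0) > 1e-9:
        raise ValueError("standard weights must sum to 1")
    return per * sum(
        w * d / p for d, p, w in zip(deaths, populations, standard_weights)
    )


if __name__ == "__main__":
    weights = [0.8, 0.2]  # hypothetical standard population: under-65, 65+
    # Same age-specific death rates, different age structures.
    younger = {"deaths": [100, 900], "pop": [1_000_000, 100_000]}
    older = {"deaths": [80, 2_700], "pop": [800_000, 300_000]}
    for name, c in [("younger", younger), ("older", older)]:
        print(
            f"{name}: crude {crude_rate(c['deaths'], c['pop']):.1f}, "
            f"ASMR {asmr(c['deaths'], c['pop'], weights):.1f} per 100,000"
        )
```

Both hypothetical countries have identical death rates at every age, so their ASMRs match, while the older country’s crude rate is nearly three times higher.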

Why I Don’t Model Hypotheticals

Rather than attempt to invent a counterfactual trendline, I prefer to use official datasets and observe what happened. Modelling introduces a cascade of compounding assumptions that are rarely disclosed alongside the final number.

Some of those pushing the 20,000-30,000 figure are unaware of how speculative it really is. It reflects one possible scenario, based on one set of modelled assumptions. It is not a number we can derive from NZ-specific data without external comparisons.

A More Honest Framing

Instead of saying, “30,000 lives were saved,” we might more honestly say:

“One model, assuming New Zealand did not implement any interventions and that the virus behaved as it did in some European countries, suggests that we might have seen up to 30,000 more deaths. But these estimates are uncertain and depend on assumptions not borne out in local data.”

Real policy evaluation should focus on measurable outcomes: total deaths, age-specific mortality, ICU capacity, and long-term health impacts. These are observable. The number of lives saved, in contrast, is a narrative.

And as with all narratives, we must ask: who benefits from telling it?

Even in 2022, people were asking why the models were so wrong. Was it because epidemiologists were given the floor but failed to consult people who actually knew the communities that would be affected? Remember “50,000 cases by Waitangi Day!!!!”?

Spoiler: it was nothing like that number.

Related articles on other sites:

https://www.theguardian.com/commentisfree/2022/jan/26/mathematicians-covid-projections-modelling